Capstone Project Update #3: Letting the Cars "See"


Since the last update, I’ve been hard at work refining the map editor further and implementing traffic signals and car percepts. More specifically, stoplights and stop signs are now fully integrated into both the path system and the map editor. Cars can detect when there is a traffic signal ahead, and can “see” cars in front of them.

Traffic Signals

In the last update I had already modeled the stop signs and stoplights and set up timers for the stoplights, but there was no integration between them and the rest of the road system. They essentially just sat on top of the intersections, sprucing up the otherwise dull scenery.

Now, traffic signals can be added directly to three- and four-way intersections in the map editor, and the signal type can be cycled by clicking on the intersection. On top of that, I’ve programmed the surrounding paths to store a reference to the adjacent traffic signal, so cars know when they’re headed towards one.
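The click-to-cycle behaviour can be sketched in a few lines. This is a Python illustration rather than the project’s Unity code, and the class name `IntersectionTile`, the `SignalType` values, and their cycling order are all assumptions.

```python
from enum import Enum


class SignalType(Enum):
    NONE = 0
    STOP_SIGNS = 1
    STOPLIGHTS = 2


class IntersectionTile:
    """Hypothetical map-editor tile for a three- or four-way intersection."""

    def __init__(self, ways: int):
        # Signals are only offered on three- and four-way intersections.
        if ways not in (3, 4):
            raise ValueError("signals require a three- or four-way intersection")
        self.ways = ways
        self.signal = SignalType.NONE

    def on_click(self) -> SignalType:
        # Step to the next signal type, wrapping from the last back to NONE.
        options = list(SignalType)
        self.signal = options[(options.index(self.signal) + 1) % len(options)]
        return self.signal


tile = IntersectionTile(ways=4)
print([tile.on_click().name for _ in range(3)])  # ['STOP_SIGNS', 'STOPLIGHTS', 'NONE']
```

Keeping the options in an enum means adding a new signal type later only extends the cycle, without touching the click handler.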

And of course, traffic signals are saved when the map is saved, and placed properly when loaded in.
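One way to persist signals is to record each intersection’s grid position and signal type, then re-attach the signals once the roads have been rebuilt. The sketch below uses JSON from Python’s standard library; the record fields and the `"signals"` key are assumptions, not the project’s actual save format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class SignalRecord:
    x: int       # grid column of the intersection tile
    y: int       # grid row of the intersection tile
    signal: str  # hypothetical labels: "stop_signs" or "stoplights"


def save_signals(records: List[SignalRecord]) -> str:
    """Serialize signal placements so they can be written out with the map."""
    return json.dumps({"signals": [asdict(r) for r in records]})


def load_signals(text: str) -> List[SignalRecord]:
    """Rebuild signal placements; the caller re-attaches each to its intersection."""
    return [SignalRecord(**entry) for entry in json.loads(text)["signals"]]


saved = save_signals([SignalRecord(3, 5, "stoplights"), SignalRecord(7, 2, "stop_signs")])
assert load_signals(saved) == [SignalRecord(3, 5, "stoplights"), SignalRecord(7, 2, "stop_signs")]
```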

Car Percepts

While visually stunning, the cars shown previously had no way of perceiving the world. Their behaviour consisted exclusively of following the defined path and choosing a random direction at intersections, clipping through other cars in their endless mission to arrive nowhere. Now, however, the cars have gained the power of sight (sort of).

The percept system isn’t finished yet, but the cars can already perceive a few different things, which will soon be fed into a neural network and used to train them.

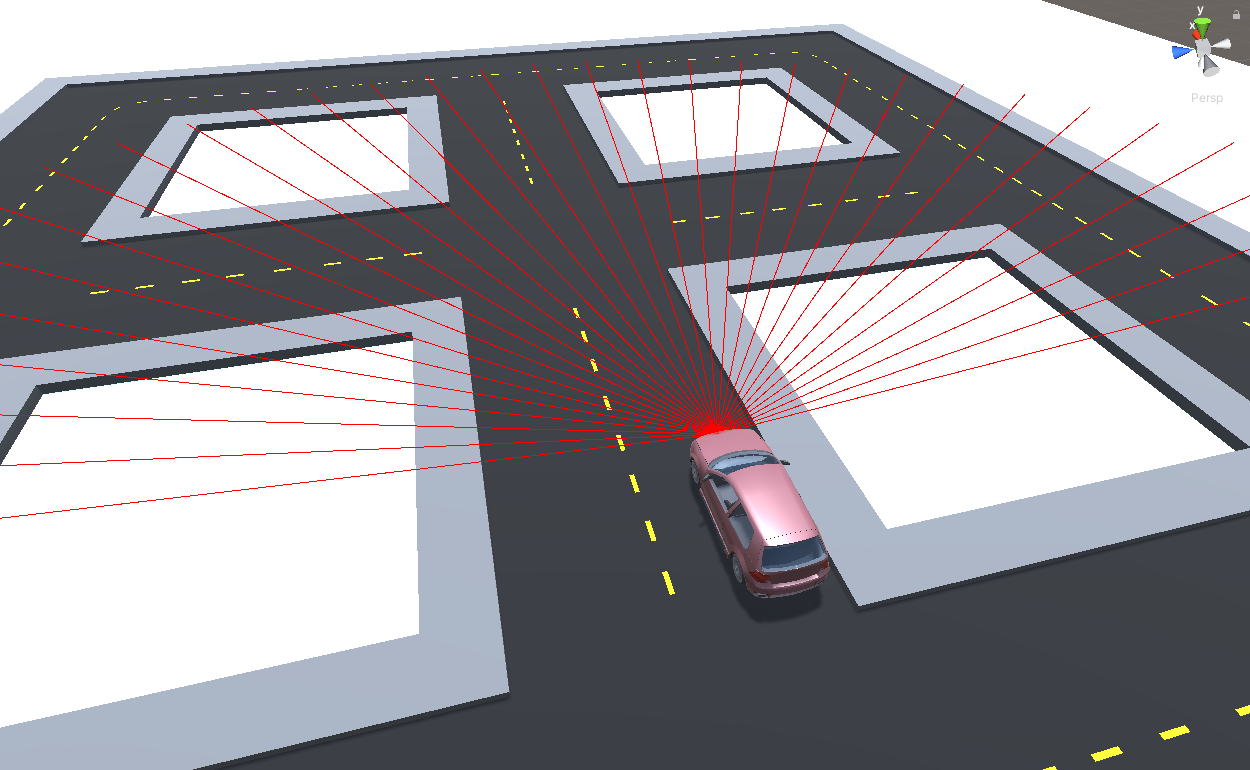

Raycasts

To detect other cars in the world, each vehicle has been outfitted with several raycasts that originate from the front of the car and point out in different directions. For those unfamiliar, a raycast is Unity’s way of probing the scene: it casts an invisible ray from an origin point in a specified direction and reports whether (and where) the ray hits a collider.
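In Unity this is handled by `Physics.Raycast`. To show what the test boils down to geometrically, here is a small 2D sketch in Python (not Unity code) that casts a ray against circular obstacles and returns the distance to the nearest hit. Representing other cars as circles is a simplifying assumption.

```python
import math
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float]


def raycast(origin: Point, direction: Point, max_distance: float,
            circles: Iterable[Tuple[Point, float]]) -> Optional[float]:
    """Distance along the ray to the nearest circle it enters, or None if nothing is hit.

    circles: ((cx, cy), radius) pairs standing in for other cars' colliders.
    """
    length = math.hypot(*direction)
    dx, dy = direction[0] / length, direction[1] / length  # normalize so t is a distance
    nearest = None
    for (cx, cy), r in circles:
        # Solve |origin + t*d - centre|^2 = r^2, a quadratic in t with leading coefficient 1.
        fx, fy = origin[0] - cx, origin[1] - cy
        b = fx * dx + fy * dy
        c = fx * fx + fy * fy - r * r
        disc = b * b - c
        if disc < 0:
            continue  # the ray's line misses this circle
        t = -b - math.sqrt(disc)  # first point where the ray enters the circle
        # Negative t means the circle is behind the ray or contains the origin; skip it,
        # much as Unity's raycasts ignore colliders the ray starts inside.
        if 0 <= t <= max_distance and (nearest is None or t < nearest):
            nearest = t
    return nearest
```

For example, a ray from `(0, 0)` along `(1, 0)` toward a unit circle centred at `(5, 0)` hits it at a distance of 4.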

The number is configurable and may change, but the cars currently have 31 raycasts each: one pointing straight ahead from the center, and the other 30 angled progressively further outward until they reach the edge of the car’s peripheral vision (85°).
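Assuming the 30 angled rays are split evenly, 15 on each side of the centre ray, the spacing works out to 85° / 15 ≈ 5.67°. The sketch below generates those angles and the matching unit direction vectors; the even, symmetric spacing is an assumption, since only the ray count and the 85° limit are given.

```python
import math
from typing import List, Tuple


def ray_angles(num_rays: int = 31, max_angle: float = 85.0) -> List[float]:
    """Symmetric ray angles in degrees relative to straight ahead (0).

    Assumes an odd count: one centre ray plus (num_rays - 1) // 2 rays on each side,
    evenly spaced out to +/- max_angle.
    """
    if num_rays < 1 or num_rays % 2 == 0:
        raise ValueError("expected an odd, positive number of rays")
    per_side = (num_rays - 1) // 2
    if per_side == 0:
        return [0.0]
    step = max_angle / per_side
    return [k * step for k in range(-per_side, per_side + 1)]


def ray_directions(heading: float, num_rays: int = 31,
                   max_angle: float = 85.0) -> List[Tuple[float, float]]:
    """Unit (x, y) vectors for each ray, rotated by the car's heading in degrees."""
    return [(math.cos(math.radians(heading + a)), math.sin(math.radians(heading + a)))
            for a in ray_angles(num_rays, max_angle)]
```

Exposing the ray count and maximum angle as parameters mirrors the fact that both are configurable.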

Detecting traffic signals

As mentioned above, traffic signals are referenced by adjacent paths, which in turn lets vehicles detect when they are approaching a stoplight or stop sign. In other words, cars can now “see” the colour of the stoplight they’re approaching, or whether they’re coming up to a stop sign.
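Since each stoplight runs on a timer, the colour a car reads is simply whichever phase the timer is currently in. Below is a minimal looping-timer sketch; the phase order and durations are hypothetical, and keeping cross-traffic out of phase (for example by offsetting one direction’s clock) is left out.

```python
from itertools import accumulate
from typing import List, Tuple

# Hypothetical phase order and durations (seconds); the project's real timers may differ.
PHASES: List[Tuple[str, float]] = [("green", 10.0), ("yellow", 3.0), ("red", 10.0)]


def light_colour(elapsed: float, phases: List[Tuple[str, float]] = PHASES) -> str:
    """Colour shown by a looping stoplight `elapsed` seconds after it started."""
    cycle = sum(duration for _, duration in phases)
    t = elapsed % cycle
    # accumulate gives each phase's end time: 10.0, 13.0, 23.0 for the defaults.
    for (colour, _), end in zip(phases, accumulate(d for _, d in phases)):
        if t < end:
            return colour
    return phases[-1][0]  # only reachable through floating-point rounding
```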

Notice also that traffic signals work as expected at three-way intersections.

Car Behaviour as of Now

The current car behaviour is still quite simple, but it reflects the knowledge provided by the percepts implemented so far. Here is a brief demo of a small simulation.

The vehicles here are making use of three major sets of percepts:

- Proximity to path and node tracking
    - This keeps the cars in their lane. Currently they simply steer towards the position of the next node in the path.
- Raycast obstacle detection
    - When another vehicle is close enough in front, the car stops to avoid hitting it.
- Traffic signal detection
    - If a car arrives at a red light, it stops until the light turns green.
    - When arriving at a stop sign, the car waits three seconds before proceeding.
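The rules above amount to a small decision function evaluated every tick, plus a steering step toward the next path node. Here is a Python sketch; the stop distance, arrival radius, and signal labels are hypothetical, while the three-second stop-sign wait comes from the list above. Yellow lights are treated like green, since only red is mentioned.

```python
import math
from typing import List, Optional, Tuple

STOP_DISTANCE = 2.0   # hypothetical: stop if a raycast hit is closer than this
STOP_SIGN_WAIT = 3.0  # seconds to wait at a stop sign
ARRIVE_RADIUS = 0.5   # hypothetical: distance at which a node counts as reached

Point = Tuple[float, float]


def decide(nearest_hit: Optional[float], signal: Optional[str], waited: float) -> str:
    """Return 'stop' or 'go' for this tick.

    nearest_hit: distance to the closest raycast hit ahead, or None if clear.
    signal: 'red', 'yellow', 'green' or 'stop_sign' when the car is at a signal, else None.
    waited: seconds already spent stopped at the current signal.
    """
    if nearest_hit is not None and nearest_hit < STOP_DISTANCE:
        return "stop"  # a car is close ahead
    if signal == "red":
        return "stop"  # hold until the light turns green
    if signal == "stop_sign" and waited < STOP_SIGN_WAIT:
        return "stop"  # finish the three-second wait
    return "go"        # yellow is treated like green here


def next_node_index(position: Point, nodes: List[Point], index: int) -> int:
    """Skip past any nodes the car has already reached."""
    while index < len(nodes) - 1 and math.dist(position, nodes[index]) < ARRIVE_RADIUS:
        index += 1
    return index


def steer(position: Point, target: Point) -> Point:
    """Unit direction from the car toward its target node."""
    dx, dy = target[0] - position[0], target[1] - position[1]
    length = math.hypot(dx, dy) or 1.0  # avoid dividing by zero when on the node
    return dx / length, dy / length
```

Checking obstacles before signals means a car already stopped behind another car stays put even when the light turns green.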

Currently, the simple logic I’ve implemented makes for a fairly reliable but crude traffic simulator. Using these same percepts (along with a few others) as the inputs to a neural network, however, will hopefully give rise to some interesting, complex, and “intelligent” behaviour.
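Before percepts can be fed to a network, they have to be flattened into a fixed-length vector of numbers. One common approach, sketched below with assumed signal categories and scaling, is to normalize ray hit distances to [0, 1], one-hot encode the signal state, and append the normalized distance from the path.

```python
from typing import List, Optional

# Hypothetical signal categories, one-hot encoded in this order.
SIGNAL_STATES = ["none", "green", "yellow", "red", "stop_sign"]


def encode_percepts(ray_hits: List[Optional[float]], ray_length: float,
                    signal: str, distance_to_path: float,
                    max_path_distance: float) -> List[float]:
    """Flatten percepts into a fixed-length vector of values in [0, 1].

    ray_hits: one entry per ray, the hit distance or None when the ray hit nothing.
    """
    if signal not in SIGNAL_STATES:
        raise ValueError(f"unknown signal state: {signal!r}")
    # A clear ray reads 1.0; closer obstacles give smaller values.
    rays = [1.0 if hit is None else min(hit / ray_length, 1.0) for hit in ray_hits]
    signal_one_hot = [1.0 if signal == state else 0.0 for state in SIGNAL_STATES]
    # Lateral offset from the lane, clipped so large errors saturate at 1.0.
    path_offset = [min(abs(distance_to_path) / max_path_distance, 1.0)]
    return rays + signal_one_hot + path_offset
```

With 31 rays this yields 37 values per step. In ML-Agents, values like these would typically be added inside an agent’s `CollectObservations(VectorSensor sensor)` override via `sensor.AddObservation(...)`.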

What’s Next

The last thing I need to do before officially incorporating ML-Agents into the project is to give each car a way to detect when it has made a mistake. During training, the vehicles will be given a negative reward for a variety of mistakes (straying too far from the path, running a red light, hitting another vehicle, etc.), but they will have no idea they’ve made one until that detection is in place.
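Mistake detection can be kept separate from reward assignment: one function reports which mistakes happened during a step, and another turns them into a penalty. The sketch below covers the three examples named above; the penalty sizes, the off-path threshold, and the `StepReport` fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical penalty sizes; real values would be tuned during training.
PENALTIES = {"off_path": -0.5, "ran_red_light": -1.0, "collision": -1.0}
MAX_PATH_DISTANCE = 1.5  # hypothetical: how far from the path counts as straying


@dataclass
class StepReport:
    distance_from_path: float
    entered_intersection_on_red: bool
    collided: bool


def detect_mistakes(report: StepReport) -> List[str]:
    """Names of every mistake the car made during this step."""
    mistakes = []
    if report.distance_from_path > MAX_PATH_DISTANCE:
        mistakes.append("off_path")
    if report.entered_intersection_on_red:
        mistakes.append("ran_red_light")
    if report.collided:
        mistakes.append("collision")
    return mistakes


def penalty(mistakes: List[str]) -> float:
    """Total (negative) reward for the given mistakes."""
    return sum((PENALTIES[m] for m in mistakes), 0.0)
```

In ML-Agents, the resulting value would be passed to the agent’s `AddReward`, and a collision might also end the episode with `EndEpisode()`.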

Once that’s done, I’ll be able to start integrating ML-Agents into the project, and soon after that begin training the agents!

Here’s an update to the remainder of my planned timeline for the project.

| Task | Feb W1 | Feb W2 | Feb W3 | Feb W4 | Mar W1 | Mar W2 | Mar W3 | Mar W4 | Apr W1 | Apr W2 | Apr W3 | Apr W4 | May W1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Simulation Setup | | | | | | | | | | | | | |
| ML-Agents Setup | | | | | | | | | | | | | |
| ML-Agents Training & Testing | | | | | | | | | | | | | |
| Integrate ML-Agents into Simulation | | | | | | | | | | | | | |
| Polish | | | | | | | | | | | | | |
| User Testing | | | | | | | | | | | | | |
| Done! | | | | | | | | | | | | | |

Regarding changes to the timetable

I’m realizing as the weeks go on that my planned timetable was a little optimistic. In particular, setting up the map editor and getting it into good shape took longer than I expected, so other tasks have been pushed back, shortened, or both. Fortunately, I allotted quite a lot of time for training and testing the agents, so I’m hopeful that phase will leave enough slack to absorb the delay. If things don’t go smoothly, I’ll likely have to work overtime to finish the project by May.

Challenges

To speak more to the above, I’ll go over some of the more difficult tasks I’ve encountered so far:

- Implementing a way for roads to automatically recognize and connect to other roads turned out to be quite a challenge, and a pretty good hands-on lesson in OOP concepts.
    - This is described in more detail in a previous update.
    - My solution ended up being a proper inheritance system: each road type derives from a base road class and implements its own methods for updating when adjacent roads are placed and for converting to other road types.
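A stripped-down version of that pattern is sketched below in Python. The class names, the neighbour-count rule for conversion, and the omission of corner pieces are simplifications for illustration, not the project’s actual Unity classes.

```python
from abc import ABC, abstractmethod
from typing import Dict, Type


class Road(ABC):
    """Base road tile: tracks neighbours and decides how to react when they change."""

    def __init__(self) -> None:
        self.neighbours: Dict[str, "Road"] = {}  # direction ("N", "E", "S", "W") -> road

    def connect(self, direction: str, other: "Road") -> "Road":
        """Record a new neighbour and return whatever road should now occupy this tile."""
        self.neighbours[direction] = other
        return self.on_neighbours_changed()

    def convert_to(self, road_type: Type["Road"]) -> "Road":
        """Replace this tile with another road type, carrying the neighbours over."""
        replacement = road_type()
        replacement.neighbours = dict(self.neighbours)
        return replacement.on_neighbours_changed()

    @abstractmethod
    def on_neighbours_changed(self) -> "Road":
        """Each road type implements its own update rule."""


class StraightRoad(Road):
    def on_neighbours_changed(self) -> Road:
        # A third connection turns a straight piece into a T-junction.
        return self.convert_to(ThreeWayIntersection) if len(self.neighbours) >= 3 else self


class ThreeWayIntersection(Road):
    def on_neighbours_changed(self) -> Road:
        # A fourth connection completes a four-way intersection.
        return self.convert_to(FourWayIntersection) if len(self.neighbours) == 4 else self


class FourWayIntersection(Road):
    def on_neighbours_changed(self) -> Road:
        return self  # nothing further to convert to
```

Because `connect` returns the tile that should now occupy the grid cell, the caller (here, the map editor) swaps in the replacement whenever a conversion happens, and the base class never needs to know which road types exist.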

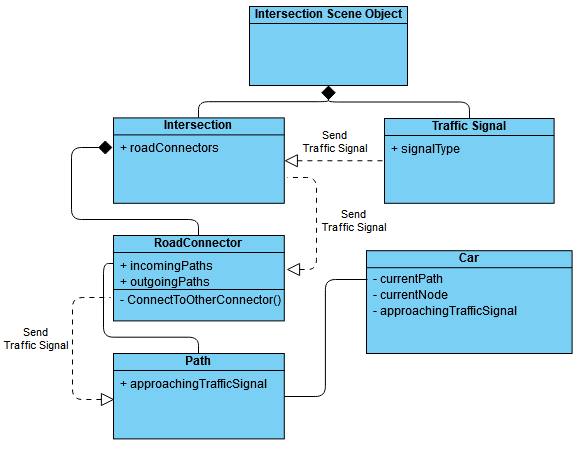

- Finding a way to communicate traffic signals to the cars also proved challenging.
    - The only thing the cars can really detect about the road is which path they’re connected to. To communicate the status of an approaching traffic signal, I passed a reference to the traffic signal to the intersection’s road connections, which then pass that reference on to the incoming paths.
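The hand-off from intersection to road connections to incoming paths can be sketched as follows (Python, with hypothetical class and field names). Because every incoming path holds the same signal object rather than a copy, a later colour change is visible to any car on those paths without re-propagating anything.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TrafficSignal:
    kind: str            # hypothetical labels: "stoplight" or "stop_sign"
    colour: str = "red"  # only meaningful for stoplights


@dataclass
class Path:
    incoming: bool  # does traffic on this path flow into the intersection?
    signal: Optional[TrafficSignal] = None


@dataclass
class RoadConnection:
    paths: List[Path] = field(default_factory=list)

    def set_signal(self, signal: TrafficSignal) -> None:
        # Only lanes heading into the intersection need to know about the signal.
        for path in self.paths:
            if path.incoming:
                path.signal = signal


@dataclass
class Intersection:
    connections: List[RoadConnection] = field(default_factory=list)

    def place_signal(self, signal: TrafficSignal) -> None:
        # Hand the same reference to every connection; each forwards it to its paths.
        for connection in self.connections:
            connection.set_signal(signal)


def signal_ahead(current_path: Path) -> Optional[TrafficSignal]:
    """What a car 'sees': the signal referenced by the path it is driving on."""
    return current_path.signal
```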

These challenges aside, the project has progressed smoothly so far (albeit slowly). I’ll begin implementing the machine learning side of the simulation soon, so stay tuned to see how it goes.